Compute Shader

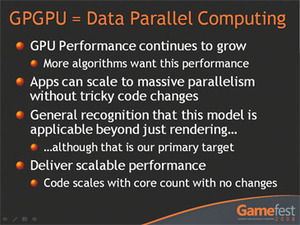

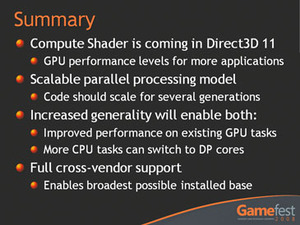

Aside from tessellation, the other major addition to DirectX 11 is the Compute Shader. This isn’t something that’s especially new; instead, it’s more a formalisation of what AMD and Nvidia have been talking about for a few years now.

Nvidia in particular has been pushing its CUDA platform pretty heavily ever since the launch of the GeForce 8800 GTX in November 2006 – it’s the closest we’ve got to massively parallel computing thus far. Having said that, the current lack of cross-platform compatibility may plague CUDA’s acceptance in the wider market. That is, unless things progress into the realm of complete programmability.

What’s clear with CUDA though is that Nvidia’s architects have managed to transform the GPU from a piece of silicon that did nothing but solve graphics problems into something that can accelerate massively parallel general-purpose applications. A host of applications accelerated using CUDA are already starting to show up on the market and now even Intel is starting to take note.

AMD has been working on the same with its Stream Computing initiative and there are some consumer-class applications coming to market soon that will be accelerated by ATI Radeon graphics. Interestingly though, Microsoft’s reasoning for including a Compute Shader in DirectX 11 seems to be more focused on the gaming side of things than it is on general-purpose GPU computing. However, after speaking to several industry luminaries, I expect the Compute Shader will be used for more than just solving certain problems games developers face.

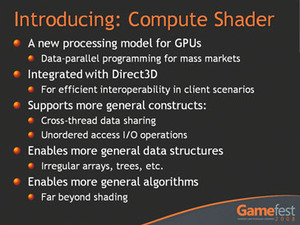

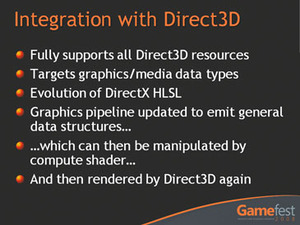

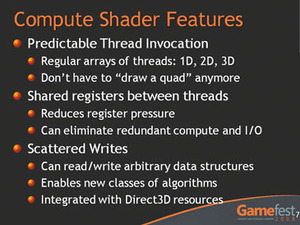

A lot of what Gee talked about during his presentation at Nvision was nothing new to me, but it is new to DirectX. Things like being able to write general-purpose code without having to use triangles, share data between threads and handle scattered write operations are all possible with both AMD’s Stream SDK and Nvidia’s CUDA compiler, but neither has the all-important hardware-agnostic compatibility at the moment. Microsoft will enable these new coding extensions and syntaxes with an updated version of HLSL (High Level Shading Language).
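To make "shared data between threads" and "scattered writes" a little more concrete, here is a minimal CPU-side sketch in C++. It is only an analogy: it is not HLSL, CUDA or Stream SDK code, and the function name `scatter_histogram` and its parameters are hypothetical. Each worker thread reads its share of the input and then writes to a bin whose position depends on the data it just read, which is the kind of write pattern a classic pixel shader could not express.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <thread>
#include <vector>

// Hypothetical helper: counts how many input values fall into each bin.
// The bins are shared by every thread, and each write lands at a
// data-dependent location (a "scattered" write).
std::vector<std::uint32_t> scatter_histogram(const std::vector<std::uint8_t>& input,
                                             std::size_t bins,
                                             unsigned thread_count) {
    assert(bins > 0 && thread_count > 0);

    // Shared storage, visible to all workers; atomics keep concurrent
    // increments of the same bin from being lost.
    std::vector<std::atomic<std::uint32_t>> shared(bins);
    for (auto& bin : shared) bin.store(0);

    std::vector<std::thread> workers;
    for (unsigned t = 0; t < thread_count; ++t) {
        workers.emplace_back([&input, &shared, bins, thread_count, t] {
            // Strided loop: thread t handles elements t, t + thread_count, ...
            for (std::size_t i = t; i < input.size(); i += thread_count) {
                const std::size_t target = input[i] % bins;  // address depends on the data
                shared[target].fetch_add(1, std::memory_order_relaxed);
            }
        });
    }
    for (auto& w : workers) w.join();

    // Copy the shared counters into a plain vector for the caller.
    std::vector<std::uint32_t> result(bins);
    for (std::size_t b = 0; b < bins; ++b) result[b] = shared[b].load();
    return result;
}
```

On a GPU the same idea would be spread over far more threads than this, but the structure (many threads, one shared buffer, writes to computed addresses) is the part that carries over.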

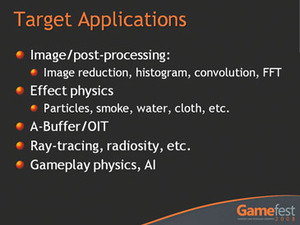

With this in mind, the graphics pipeline will be able to generate data structures traditionally associated with general-purpose computing tasks handled by the CPU. Using the Compute Shader, these tasks will be able to scale across n cores as long as there is scope for the application to be that parallel. According to Microsoft, the main target applications for the Compute Shader include post processing, physics and AI amongst other things. Of those other things mentioned by Gee, one was ray tracing.

More important, though, was the mention of gameplay physics. Right now, developers are divided between the three options available: PhysX, Havok or creating their own physics engine. None of these is ideal in my opinion, because the developer is limited in one way or another with all three.
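As a rough illustration of what "scaling across n cores" means for something like post processing, the C++ sketch below splits a buffer of pixel values into contiguous slices and hands one slice to each worker. It runs on the CPU and is not Compute Shader code; `scale_brightness` and its parameters are made up for this example. The point is that each pixel can be processed without looking at any other, so adding workers just makes the slices smaller.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical post-processing pass: multiply every pixel by `gain`
// and clamp to 1.0, with the work divided across `workers` threads.
void scale_brightness(std::vector<float>& pixels, float gain, unsigned workers) {
    assert(workers > 0);

    const std::size_t n = pixels.size();
    const std::size_t chunk = (n + workers - 1) / workers;  // ceiling division

    std::vector<std::thread> pool;
    for (unsigned w = 0; w < workers; ++w) {
        const std::size_t begin = std::min(n, w * chunk);
        const std::size_t end = std::min(n, begin + chunk);
        if (begin == end) break;  // more workers than work left

        // Each worker owns a disjoint slice, so no synchronisation is needed.
        pool.emplace_back([&pixels, gain, begin, end] {
            for (std::size_t i = begin; i < end; ++i) {
                pixels[i] = std::min(1.0f, pixels[i] * gain);
            }
        });
    }
    for (auto& t : pool) t.join();
}
```

In practice you might pass `std::thread::hardware_concurrency()` as the worker count. Workloads where elements depend on one another have less of this "scope to be that parallel", which is the caveat in the paragraph above.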

PhysX is accelerated only by Nvidia’s CUDA-enabled GPUs and otherwise runs on the CPU, while Havok is soon to be accelerated by AMD GPUs and likewise falls back onto the CPU. Finally, coding your own physics engine takes a lot of effort and, as a developer, you’re most likely to opt for the CPU because every system your game will run on has one with a similar feature set. It’s not quite as simple with GPUs at the moment because their similarities end at API compatibility. With the Compute Shader, or OpenCL for that matter, the headaches end and the developer can just focus on implementing gameplay physics.

Having said that though, it’s going to be a while before gameplay physics are heavily integrated into games – they can’t be implemented if they’re going to break the game for anyone without the capabilities required to accelerate the physics engine. Because of this, I don’t see gameplay physics becoming a “really big thing” until DirectX 11 becomes the minimum specification for game developers.
